Hi, this is Rich from N.C. again, with my last explanation of a topic from the Introduction to Music Production class on Coursera.

Today I want to compare some different software synthesizers in Reason 6.5, and show you where the various components are located. I’ll be showing you the main parts: the oscillators (VCO), filters (VCF), envelopes (ADSR), low-frequency oscillators (LFO), and the amplifiers (AMP).

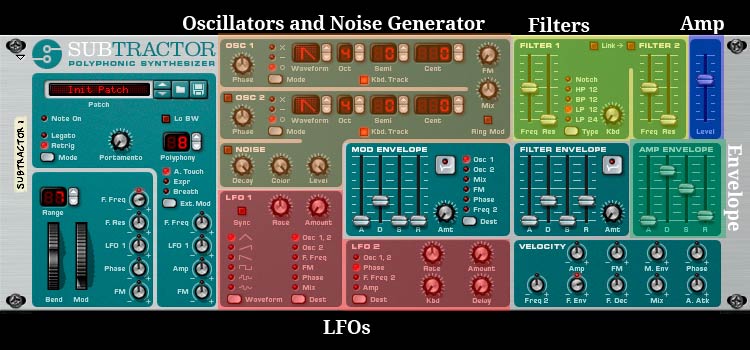

SubTractor

I’m going to start with a very popular software synthesizer in Reason, the SubTractor. It’s a self-contained synthesizer: the parts are all laid out in a fixed order. You can change their values, but not the order.

If you’ve watched the videos in class, you’ve seen the SubTractor already.

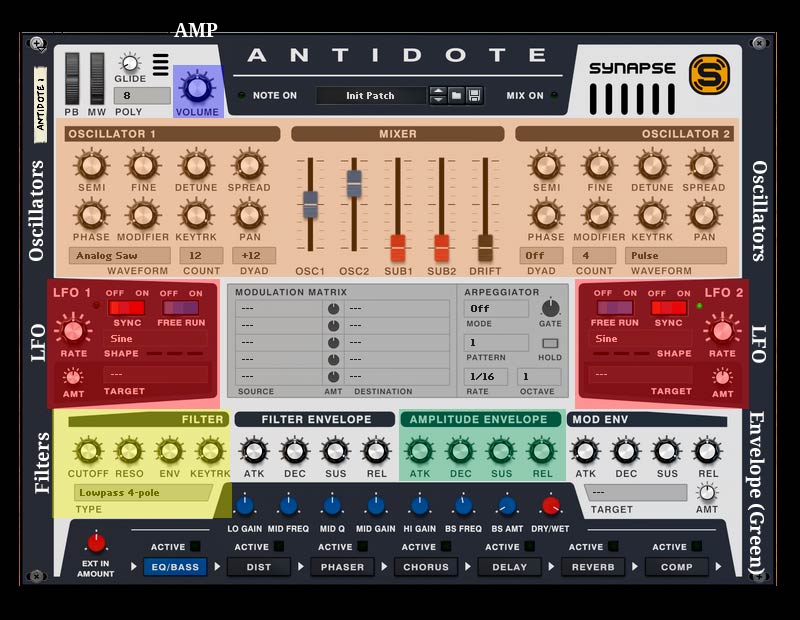

Antidote

Next up is one of my favorite software synths in Reason, Synapse Audio’s Antidote. Antidote is also a self-contained synth.

The layout is pretty straightforward here. Note that I have highlighted the “Volume” knob as the amp, because there really isn’t an amp section on this synth.

Thor

Next up is another classic Reason synth, Thor. Thor is a semi-modular synth. It differs from a self-contained synth in that you can change some of the parts from a set of fixed selections, and you can change the routing of the signal through the synth.

You can see that filter 3 on the right has no filter in it. You can pick a filter from a drop-down menu, or choose no filter. In the same vein, you can set each of the oscillators to one of six types: analog, wavetable, phase modulation, FM pair, multi-oscillator, and noise.

I highlighted the global and amp envelopes, because both can affect the amplifier. Take note of the differing design of the global envelope. There’s a delay before the attack, and a hold before the decay. This sort of envelope can do some really neat tricks.
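To make the delay and hold stages concrete, here is a minimal Python sketch of that kind of envelope. It is not Thor’s actual implementation: the function name, the parameter names, the default times, and the straight-line segments are all my own assumptions, and the release stage is left out to keep it short.

```python
def dahdsr_level(t, delay=0.1, attack=0.2, hold=0.1, decay=0.3, sustain=0.5):
    """Envelope level (0.0 to 1.0) at t seconds after note-on, while the key is held.

    All names and default times here are hypothetical, chosen for illustration.
    """
    if t < delay:
        return 0.0                  # delay: nothing happens yet
    t -= delay
    if t < attack:
        return t / attack           # attack: ramp up from 0 to 1
    t -= attack
    if t < hold:
        return 1.0                  # hold: stay at full level
    t -= hold
    if t < decay:
        # decay: fall from full level down to the sustain level
        return 1.0 - (1.0 - sustain) * (t / decay)
    return sustain                  # sustain: stay here until note-off


for t in (0.05, 0.2, 0.35, 0.55, 1.0):
    print(f"t={t:.2f}s  level={dahdsr_level(t):.2f}")
```

With these made-up settings the level stays at zero for the first tenth of a second, then rises, sits at full level for the hold, and finally settles at the sustain level.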

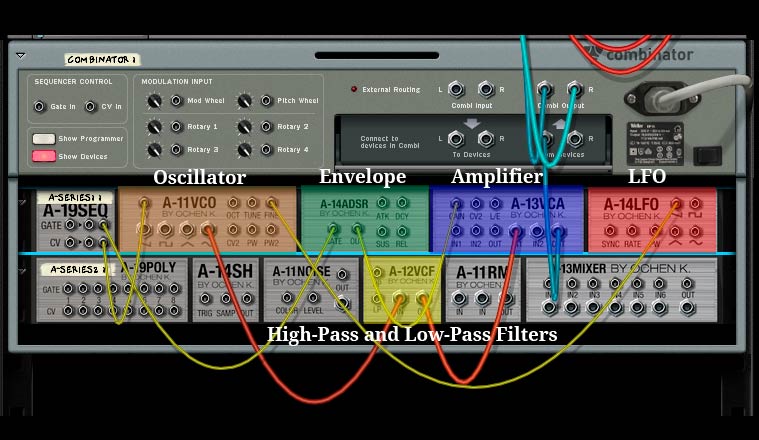

The A-Series

I’ll end with the A-Series synth by OchenK. The A-Series is a modular synthesizer, and the first one available in Reason. You can mix and match the various components however you please, and route them however you like. It’s a bit intimidating at first, but I think it’s a great way to learn how synthesizers work.

Here’s the front:

It looks simple and straightforward at first glance, but actually, each part of the synthesizer is an independent unit that functions on its own. To make a sound, you have to hook all of these units together, like this for example:

The green wires are virtual control voltage (CV) lines, and the red and blue lines are virtual audio cables. You can see the signal comes in through the A-19 SEQ in the upper left, which receives the note-on and note value data from the MIDI keyboard. The gate carries the note-on and note-off MIDI signals to the ADSR envelope unit, which creates an envelope and sends it to the VCA (amplifier). The envelope tells the VCA when to let the sound through, like controlling a virtual volume knob.

The wire coming from the jack labelled “CV” on the A-19 SEQ carries the note pitch data. That goes to the VCO (oscillator) next to it, and the data tells the VCO what frequency to play. You select which waveform you want to use by running an audio cable out of it. I’m running a cable out of the sine wave oscillator. The red cable carries the audio out to the A-12 VCF, which contains a high-pass and a low-pass filter, then the audio runs out from there to the amplifier. I could run cables out of all 4 oscillators to the A-13 mixer if I wanted to. (But that’s another demo.)
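If it helps to see that chain written out, here is a rough Python sketch of the same path: pitch data to the oscillator, then the filter, then the amplifier. It is only an illustration, not how the A-Series works inside. The function names, the sample rate, and the one-pole low-pass standing in for the VCF are my assumptions, and the amplifier uses a fixed level where the patch would use the envelope.

```python
import math

SAMPLE_RATE = 44100  # samples per second (an assumed value)


def note_to_hz(note):
    """Pitch data: convert a MIDI note number to Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


def vco_sine(freq, n):
    """Oscillator: n samples of a sine wave at freq Hz."""
    return [math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]


def vcf_lowpass(samples, cutoff):
    """Filter: a simple one-pole low-pass, a stand-in for the real VCF."""
    alpha = 1 - math.exp(-2 * math.pi * cutoff / SAMPLE_RATE)
    out, y = [], 0.0
    for x in samples:
        y += alpha * (x - y)   # move part of the way toward the new sample
        out.append(y)
    return out


def vca(samples, level):
    """Amplifier: scale the audio by a level between 0.0 and 1.0."""
    return [s * level for s in samples]


freq = note_to_hz(60)  # middle C
audio = vca(vcf_lowpass(vco_sine(freq, 1000), cutoff=2000.0), level=0.8)
print(f"{freq:.2f} Hz, {len(audio)} samples")
```

Each function plays the part of one module, and nesting the calls plays the part of the cables.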

Finally, I connected the A-14 LFO (in the upper right corner) to the fine-tuning control of the VCO to create a little vibrato.
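Here is a small Python sketch of what that LFO connection does to the pitch. Again, this is my own illustration under assumptions, not the A-14’s real behavior: the function name, the sample rate, and measuring the vibrato depth in cents (1200 cents to the octave) are choices I made for the example.

```python
import math

SAMPLE_RATE = 44100  # samples per second (an assumed value)


def vibrato_sine(base_hz, lfo_hz, depth_cents, n):
    """Sine oscillator whose pitch is wobbled up and down by a slow LFO."""
    phase = 0.0
    out = []
    for i in range(n):
        out.append(math.sin(phase))
        # LFO output swings between -1 and 1
        lfo = math.sin(2 * math.pi * lfo_hz * i / SAMPLE_RATE)
        # shift the pitch by up to depth_cents in either direction
        freq = base_hz * 2 ** (depth_cents * lfo / 1200)
        # advance the oscillator by one sample at the current frequency
        phase += 2 * math.pi * freq / SAMPLE_RATE
    return out


wobbly = vibrato_sine(base_hz=440.0, lfo_hz=5.0, depth_cents=30.0, n=44100)
print(len(wobbly), "samples of A4 with a gentle 5 Hz vibrato")
```

Setting `depth_cents` to 0 gives a plain, steady sine wave, which is like unplugging the LFO cable.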

It looks complex, but you can learn a lot from it, and do even more. (You can even make an FM synth out of these!)

Reflection

I hope you learned a little bit about the software synthesizers in Propellerhead Software’s Reason 6.5. I left the filter and mod envelopes out of the discussion and didn’t highlight them, because they’re a bit of an advanced topic.

You can also probably tell that I’m a big fan of modular synthesis.

This has been a very interesting and fun class. Good luck to everyone out there!